Ethical Data Analytics – what every business needs to know

- nathanvankan8

- May 11, 2021

Updated: Mar 29, 2024

Every company has customer data. The question is what it can do with that data. Which activities fall within legal and ethical bounds, and which don’t? Even companies that have not historically been careful about how they leverage customer data certainly are now. A raft of new legislation worldwide (such as GDPR here in Europe) has put this issue top of mind for every company that stores any data about its customers and uses it to send marketing emails.

Whether your company is big enough to have a team of data scientists using self-learning algorithms, or just one analyst slicing and dicing data in the back room, data analytics can help improve business performance by enabling you to make data-driven decisions about key business processes. But no matter the size, if your company uses customer data to make customer-facing decisions, there are ethical issues that need to be considered.

When ‘Know Your Customer’ goes too far

Any decision or action based on customer data can have damaging personal consequences, ranging from merely uncomfortable to life-altering. By 2012, the U.S. retail giant Target had already figured out how to data-mine its way into a woman’s womb, learning that a customer had a baby on the way long before she needed to start buying diapers.

The impact of such insights can be particularly damaging if they 1) have a profound influence over a person’s life (think banking), or 2) have even a shallow influence over masses of people (think social media). In the first instance, banks that use data analytics to decide, for example, who gets a mortgage and at which rate can have a lasting and potentially harmful impact on someone’s life. In the second, the Facebook/Cambridge Analytica scandal, which concerned political campaigning in 2016 and came to light in 2018, provided a clear roadmap of how applied data analytics can influence mass behavior at the ballot box.

Whether or not decisions have a direct impact on another human being’s life, every company needs to think about the unconscious biases that may be creeping into its algorithms, as these can damage the brand. In recent years, a Google image recognition AI erroneously labeled people as gorillas, Microsoft’s chatbot Tay became too offensive for Twitter within 24 hours, and Amazon’s hiring algorithm developed a distinct gender bias.
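One simple way to start looking for this kind of bias is to compare how often each group receives a favorable decision. The sketch below is a minimal illustration, not a complete fairness audit: the function names, the group labels "A" and "B", and the sample data are all made up for the example. It computes the selection rate per group and the ratio between the lowest and highest rate.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable decisions per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 = equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest


# Made-up illustrative data: group A is approved 8 of 10 times, group B 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3))
```

A ratio well below 1.0 does not prove discrimination, but it is a signal that the decision process deserves a closer look. A rule of thumb sometimes used in U.S. employment contexts treats ratios below 0.8 as a warning sign; whether that threshold suits your use case is a judgment call.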

Concerns like these help explain why, according to a leaked proposal circulating online recently, the European Union is considering banning the use of artificial intelligence for a number of purposes, including mass surveillance and social credit scores. It is worth noting that GDPR already places strong emphasis on transparency: it is widely read as giving consumers a ‘right to explanation’ for automated decisions imposed upon them.

Ethical frameworks to consider

Regardless of what regulations exist (or eventually arise) to mitigate unfair algorithmic behavior, data scientists are on the front lines of ethical thinking with regard to data analytics. Fortunately, there are a number of public frameworks that can be consulted by data scientists or anyone in a company who wants to avoid any legal or moral entanglements that may arise from using automated and/or data-driven decision making.

At the highest level, behaving ethically means not harming or disadvantaging other people, either directly or indirectly. As the examples of unconscious bias above show, even companies trying to do good in an objective way may end up on the wrong side of an ethical issue and be publicly condemned. Before starting any data analytics use case, we therefore recommend taking a step back and considering the ethically relevant factors of the case.

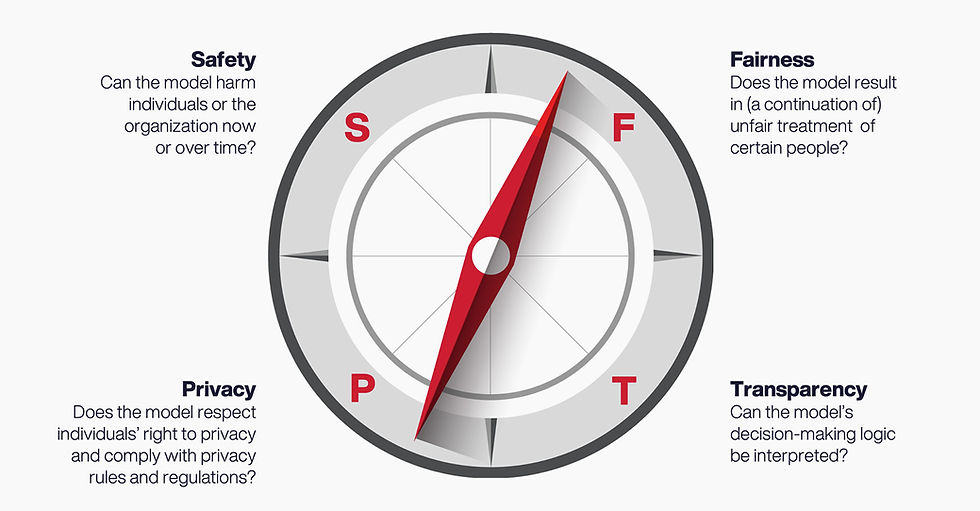

Large organizations, both governmental and non-governmental, such as Google, the EU and the UN have published guidelines for using data, AI and machine learning (ML), which can provide ethical guidance when working with data and analytics. To save you from having to consult all of them, a meta-study of guidelines from 39 different authors shows a strong overlap on the following topics: 1) privacy; 2) safety and security; 3) transparency, accountability and explainability; 4) fairness and non-discrimination.

A practical guide to avoiding ethical missteps

IG&H has distilled these guidelines into an Ethical Risk Quick Scan that highlights areas in need of particular attention and can help you assess the risks that may arise from a particular use case. The scan consists of a series of questions about, for example, who will be impacted by the decisions made, how they will be impacted, and to what extent.

For example: will you be using the data to make decisions that may have an impact on the financial well-being of a population that is considered vulnerable? If so, how might their personal behavior be impacted? And how long will it take before you are able to measure whether the algorithm may be causing an undesirable effect?
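To make questions like these concrete, they can be recorded as structured answers and turned into a short list of attention points. The sketch below is purely hypothetical and is not IG&H's actual Ethical Risk Quick Scan: the field names, the flag texts, and the thresholds (10,000 people, 6 months) are assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class UseCaseAnswers:
    """Hypothetical answers to a few scan-style questions."""
    affects_vulnerable_group: bool
    affects_financial_wellbeing: bool
    people_impacted: int
    months_until_effect_measurable: int


def flag_attention_points(answers, large_population=10_000, slow_feedback_months=6):
    """Return plain-language flags; both thresholds are illustrative assumptions."""
    flags = []
    if answers.affects_vulnerable_group and answers.affects_financial_wellbeing:
        flags.append("financial impact on a vulnerable group")
    if answers.people_impacted >= large_population:
        flags.append("large number of people impacted")
    if answers.months_until_effect_measurable >= slow_feedback_months:
        flags.append("undesirable effects are slow to measure")
    return flags


# Made-up use case: a pricing model for a vulnerable customer segment.
example = UseCaseAnswers(
    affects_vulnerable_group=True,
    affects_financial_wellbeing=True,
    people_impacted=50_000,
    months_until_effect_measurable=12,
)
for flag in flag_attention_points(example):
    print("Needs attention:", flag)
```

A list of flags like this is a conversation starter rather than a verdict; the judgment about how to respond still sits with the people responsible for the use case.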

In our next Ethical Data Analytics blog, we’ll dive a little deeper into the Ethical Risk Quick Scan and describe the framework IG&H uses to help businesses mitigate potential issues that appear as a result of the scan.

Author

Tom Jongen